5 Concepts Every Python Engineer Should Know in 2024

In computer science, understanding how hardware and software work hand in hand is critical, particularly for beginners. This understanding not only helps solidify new concepts but also gives an intuitive grasp of where they come from.

One concept that almost every programmer has at least a faint idea of is asynchronous programming. Whatever your tech stack, you will have come across it. Another fundamental concept is parallel processing. You'll find it is bread and butter in fields involving complex mathematical operations, such as machine learning, or intensive graphics rendering, as in game development.

This post highlights the similarities, differences, and relationships between these concepts, and what makes them so useful.

Contact me on Upwork for software development jobs relating to Python/SQL.

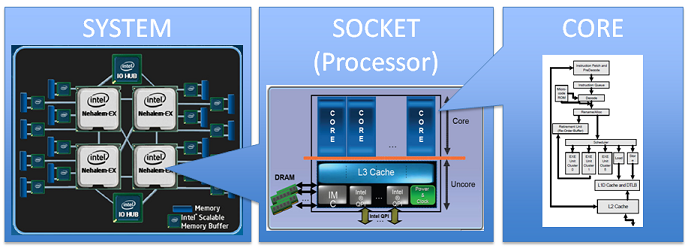

What is a processor?

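A processor, also called a CPU (central processing unit), is the hardware that executes a program's instructions. Modern processors can run multiple operations at the same time because they contain multiple cores.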

What is a processor core?

A processor core functions as a mini-processor: it can execute instructions on its own, and a processor is built from one or more cores.

Traditionally, a single processor core could execute only one task or program at a time. To simulate running several tasks simultaneously, the core switched rapidly between them, giving each a short time slice. The switching is so fast that humans can't perceive it: a switch between, say, Chrome and an antivirus scanner typically takes on the order of microseconds.
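To make time-slicing concrete, here is a toy simulation, not how a real OS scheduler is implemented. Each hypothetical task is a Python generator that does one step of work per turn, and a single loop (our stand-in for one core) runs one step from each task in round-robin order.

```python
from collections import deque
from typing import Iterator


def task(name: str, steps: int) -> Iterator[str]:
    """A toy 'task': each time it is resumed, it does one unit of work."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"


def run_on_one_core(tasks: list[Iterator[str]]) -> list[str]:
    """Round-robin scheduler: run one step of each task in turn."""
    ready = deque(tasks)           # queue of tasks waiting for the core
    trace: list[str] = []
    while ready:
        current = ready.popleft()  # give the core to the next task
        try:
            trace.append(next(current))  # run one time slice
            ready.append(current)        # switch away: back of the queue
        except StopIteration:
            pass                         # task finished; don't re-queue it
    return trace


if __name__ == "__main__":
    for step in run_on_one_core([task("chrome", 2), task("antivirus", 3)]):
        print(step)
```

The steps of the two tasks come out interleaved, even though only one step ever runs at a time.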

In today’s technology landscape, processors can incorporate multiple cores, allowing them to genuinely handle multiple tasks simultaneously, with each core working on a different task.

If a processor has, for instance, 4 cores, it can run up to 4 tasks truly in parallel, at the same instant.
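From Python, you can ask how many CPUs the operating system exposes with `os.cpu_count()`. A minimal sketch; note that this counts logical CPUs, which can be higher than the number of physical cores on chips with simultaneous multithreading, and that it may return `None` if the count can't be determined. The helper name `usable_cores` is just illustrative.

```python
import os


def usable_cores() -> int:
    """Return the number of logical CPUs Python reports, falling back to 1."""
    # os.cpu_count() counts logical CPUs (hardware threads), not physical cores,
    # and returns None when the number is unknown.
    return os.cpu_count() or 1


if __name__ == "__main__":
    print(f"This machine exposes {usable_cores()} logical CPUs")
```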

Apple’s M1 chip, for example, has 8 CPU cores: 4 high-performance cores and 4 high-efficiency cores.

So what actually runs tasks is the processor core(s), not the “processor” as such. The term ‘processor’ is just a higher-level name for the whole package, akin to calling three connected lines a ‘triangle.’

What is a process in computer science?

You know how programs you download on Windows often have an .exe extension? Say you want to start one. You double-click it, the instructions in the program file are executed, and the program starts.

This instantiation is what is called a process. In essence, a process is the active occurrence or instance of a program.

There is no single visible moment when a launched program becomes a process, but a good rule of thumb is this: once the operating system has given it a process ID (PID) and allocated it space in RAM, the process exists.

A program is simply the executable file on your disk. A process is what comes into existence when that file is executed. A program occupies disk space but uses no CPU time or memory until it is run; a process consumes CPU time and memory (RAM) while it runs.
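The program/process distinction is easy to see from Python. This sketch treats the Python interpreter (`sys.executable`) as the program on disk and starts it as a new process with `subprocess.run`. The child reports its own PID, which differs from the PID of the process that launched it. The helper name `child_pid` is hypothetical.

```python
import os
import subprocess
import sys


def child_pid() -> int:
    """Start the Python program as a new process and return the PID it reports."""
    # sys.executable is the interpreter file on disk (the *program*);
    # running it creates a brand-new *process* with its own PID.
    result = subprocess.run(
        [sys.executable, "-c", "import os; print(os.getpid())"],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout.strip())


if __name__ == "__main__":
    print("this process: ", os.getpid())
    print("child process:", child_pid())
```

Run it twice and the child's PID changes, because each run creates a new process from the same program file.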

A process can be in one of the following states: New, Ready, Running, Waiting, Terminated. A program doesn’t have a state.
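These five states can be modeled as a small state machine. The transition table below is a simplified textbook version (real operating systems have more states and edge cases), and `is_valid_history` is a hypothetical helper that checks whether a sequence of states could have happened under that model.

```python
from enum import Enum, auto


class State(Enum):
    NEW = auto()
    READY = auto()
    RUNNING = auto()
    WAITING = auto()
    TERMINATED = auto()


# Simplified five-state model: which states a process may move to next.
TRANSITIONS: dict[State, set[State]] = {
    State.NEW: {State.READY},            # admitted by the OS
    State.READY: {State.RUNNING},        # scheduled onto a core
    State.RUNNING: {
        State.READY,                     # pre-empted: time slice used up
        State.WAITING,                   # blocked on I/O or another event
        State.TERMINATED,                # finished (or killed)
    },
    State.WAITING: {State.READY},        # the awaited event happened
    State.TERMINATED: set(),             # no way back
}


def is_valid_history(states: list[State]) -> bool:
    """True if every consecutive pair of states is an allowed transition."""
    return all(nxt in TRANSITIONS[cur] for cur, nxt in zip(states, states[1:]))
```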

Now, the Big Boy: Threads, and How It All Connects to Asynchronous Programming

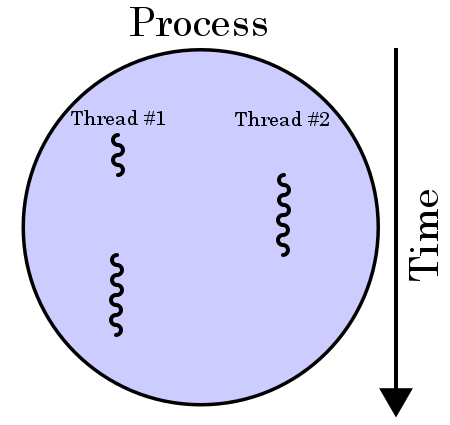

Threads are independent paths of execution inside a process: each thread carries out its own sequence of instructions and can be scheduled on a core separately from the others. Threads don't have to coordinate to make progress, although they can, and often do, communicate through the memory they share.

Threads started at the same time may finish at the same time or at different times.

A thread doesn’t need other threads to finish before it can finish, unless the program explicitly makes it wait (for example, by joining another thread or waiting on a lock).

All threads within a process share the resources allocated to it, including its memory and address space. This shared address space makes it easy for every thread in the process to access the same data.
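A short sketch of threads sharing memory: several threads update the same `total` variable. Because they share it, each update is wrapped in a `threading.Lock` so that no two threads modify it at the same moment. The function name and counts are illustrative.

```python
import threading


def count_with_threads(num_threads: int = 4, increments: int = 10_000) -> int:
    total = 0                # one variable, visible to every thread in this process
    lock = threading.Lock()  # guards `total` against simultaneous updates

    def worker() -> None:
        nonlocal total
        for _ in range(increments):
            with lock:       # only one thread at a time may update `total`
                total += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()             # wait until every thread has finished
    return total


if __name__ == "__main__":
    print(count_with_threads())  # 40000
```

Every thread sees and changes the same `total`, which is exactly what shared memory means; the lock is the price of that convenience.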

Each thread also needs some memory of its own, such as its call stack, so a process’s memory use grows as it creates more threads.

So what does this have to do with asynchronous programming?

Once you understand what threads are and what they do, you should have some intuition about what happens under the hood when asynchronous operations run.

Put simply, one common way to run a function asynchronously is to spawn a new thread that is given the function’s instructions and carries them out while the rest of the program moves on.
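A minimal sketch of this thread-based approach using Python’s `threading` module. `slow_download` is a hypothetical stand-in for slow I/O (it just sleeps). `start()` returns immediately, so the caller keeps going while the new thread does the work, and `join()` waits for it later.

```python
import threading
import time


def slow_download(log: list[str]) -> None:
    """Hypothetical stand-in for slow I/O; it just sleeps for 0.2 s."""
    time.sleep(0.2)
    log.append("download finished")


def start_in_background() -> list[str]:
    log: list[str] = []
    worker = threading.Thread(target=slow_download, args=(log,))
    worker.start()                   # returns immediately; the work runs on the new thread
    log.append("caller kept going")  # the caller is not blocked while the thread works
    worker.join()                    # later, wait for the thread to finish
    return log


if __name__ == "__main__":
    print(start_in_background())  # ['caller kept going', 'download finished']
```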

It’s worth noting that an asynchronous function is usually written so it can run independently of the code that called it, much like a thread.

It can also finish whenever its work is done without delaying or blocking other code, again just like a thread. This is why threads are a natural fit for asynchronous operations.

Note that threads are not the only way to carry out asynchronous operations. But that’s beyond the scope of this post.

FAQ

What’s the difference between asynchronous and parallel operations?

SCENARIO #1

If you’ve been to a restaurant, you may have noticed that your order doesn’t have to wait until someone else’s order is completed. You and five other people who ordered at the same time could all be collecting your orders at the same time. Imagine how annoying it would be to wait out everyone else’s 5–7 minute preparation time before yours was even started.

SCENARIO #2

Imagine a road network with multiple separate routes, one car on each route.

Each car drives to its destination at the same time as the others, and even if one car breaks down, the others still arrive.

In a programming context, every function runs at the same time, regardless of the status of the other functions, and functions doing similar amounts of work finish at roughly the same time.

Both scenarios are examples of parallel processing.

Put simply, parallel processing is running operations independently and side by side at the same instant, rather than merely interleaving them (concurrently).

Asynchronous operations run concurrently, while parallel operations run in parallel.

Asynchronous operations switch tasks quickly back and forth to give the illusion of parallelism while parallel processing actually runs in parallel.
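Python’s `asyncio` shows this switching model directly. In this sketch (the `fake_request` coroutine and its delays are made up), two coroutines take turns on a single thread: each hands control back to the event loop at `await asyncio.sleep(...)`, so the other can run while it waits.

```python
import asyncio
import threading


async def fake_request(name: str, delay: float, log: list[str], thread_ids: set[int]) -> None:
    """Hypothetical I/O call; asyncio.sleep stands in for waiting on the network."""
    thread_ids.add(threading.get_ident())  # record which OS thread we are running on
    log.append(f"start {name}")
    await asyncio.sleep(delay)             # pause here; the event loop runs other tasks
    log.append(f"end {name}")


async def run_both() -> tuple[list[str], set[int]]:
    log: list[str] = []
    thread_ids: set[int] = set()
    # gather() runs both coroutines concurrently on the same event loop
    await asyncio.gather(
        fake_request("A", 0.2, log, thread_ids),
        fake_request("B", 0.1, log, thread_ids),
    )
    return log, thread_ids


if __name__ == "__main__":
    log, thread_ids = asyncio.run(run_both())
    print(log)              # ['start A', 'start B', 'end B', 'end A']
    print(len(thread_ids))  # 1
```

Both requests are in flight at once, yet everything ran on one thread: concurrency without parallelism.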

The result of an asynchronous operation isn’t available right away: the caller has to wait for it (in Python, typically with await) before it can use the value.

Asynchronous operations are usually most effective for I/O-bound work, while parallel processing is most effective for CPU-bound work.

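Asynchronous operations are built on threads, coroutines, and task schedulers (such as an event loop), while parallel processing in Python typically uses multiple processes. This is because in standard CPython the Global Interpreter Lock (GIL) lets only one thread execute Python bytecode at a time, so threads alone can’t run Python code on several cores at once.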
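For CPU-bound work, a common approach in Python is `concurrent.futures.ProcessPoolExecutor`, which spreads calls across worker processes so they can run on different cores. The prime-counting function is just an illustrative CPU-heavy workload, and the helper names are hypothetical. The `if __name__ == "__main__":` guard matters because worker processes may re-import the script.

```python
from concurrent.futures import ProcessPoolExecutor


def count_primes(limit: int) -> int:
    """Deliberately CPU-heavy: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count


def count_primes_in_parallel(limits: list[int]) -> list[int]:
    # Each call runs in a separate worker process, so on a multi-core
    # machine the calls can execute at the same time on different cores.
    with ProcessPoolExecutor() as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    print(count_primes_in_parallel([50_000, 50_000, 50_000, 50_000]))
```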

What’s the difference between a thread and a process?

Threads are agents that execute instructions inside a given process. They share the memory and other resources allocated to that process.

Processes, on the other hand, are instances of a program, and by default they don’t share memory with other processes. To exchange data, they must use inter-process communication, such as pipes, queues, or explicitly shared memory.
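The contrast between shared and separate memory can be demonstrated with a module-level `counter` (a hypothetical name). A thread that increments it changes the value the rest of the process sees, while a child process started with `multiprocessing.Process` only changes its own copy. The `if __name__ == "__main__":` guard is there because, depending on the platform’s start method, the child may re-import the script.

```python
import multiprocessing
import threading

counter = 0  # module-level variable; every process gets its own copy


def increment() -> None:
    global counter
    counter += 1


def counter_after_thread() -> int:
    """Run increment() in a thread of THIS process, then read our counter."""
    global counter
    counter = 0
    t = threading.Thread(target=increment)
    t.start()
    t.join()
    return counter  # the thread changed memory it shares with us


def counter_after_process() -> int:
    """Run increment() in a separate child process, then read our counter."""
    global counter
    counter = 0
    p = multiprocessing.Process(target=increment)
    p.start()
    p.join()
    return counter  # the child changed its own copy, not ours


if __name__ == "__main__":
    print("after thread: ", counter_after_thread())   # 1
    print("after process:", counter_after_process())  # 0
```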

What is an example of a process and a thread?

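Process: a running Google Chrome browser (Chrome actually splits itself into several processes, typically giving each tab its own)

Thread: inside a tab’s process, the main thread that runs the page’s JavaScript, alongside helper threads for other work

Process: a running VS Code application

Thread: one of several threads inside the editor’s processes, for example one that keeps the interface responsive while another reads files from disk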

Can we have multiple processors in a computer?

Yes. A computer with two or more physical processors, each usually with multiple cores, is called a multiprocessor system, and using several processors to run tasks simultaneously is called multiprocessing.

This setup is mostly found in servers, supercomputers, and high-end workstations, where heavy tasks need fast computation. You’re very unlikely to see it in an everyday consumer laptop.

Why Can’t We Use Multiple Processors in Every Computer?

Using multiple processors in most computers would cost more than it would benefit the consumers and the manufacturers.

This is because each additional processor (CPU) brings a lot of overhead in system management. For instance, the CPUs need to communicate with each other efficiently when needed, and the motherboard needs extra hardware to connect every CPU to the RAM and other resources.

And most day-to-day activities don’t require heavy computation. So even if multiple processors were available, they would mostly be redundant, and consumers wouldn’t notice a significant difference.

When do we use threading instead of multiprocessing?

We use threading for I/O-bound operations, such as network requests, downloading or fetching files, and reading from or writing to disk.

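We use multiprocessing for CPU-bound operations, such as number crunching, vector multiplication, and image processing. Note that some libraries get parallelism without Python-level multiprocessing: NumPy, for example, hands operations such as matrix multiplication to an optimized BLAS library, which may run on multiple threads in native code.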
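For the I/O-bound case, a thread pool lets many waits overlap. In this sketch, `fake_network_request` is a hypothetical stand-in that sleeps for 0.2 s instead of calling a real server, and the example.com URLs are placeholders. Five requests run one after another would take about 1 s; with a `concurrent.futures.ThreadPoolExecutor` they overlap and finish in roughly 0.2 s.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_network_request(url: str) -> str:
    """Hypothetical stand-in for a network call: it just waits 0.2 s."""
    time.sleep(0.2)  # while a thread waits like this, other threads can run
    return f"response from {url}"


def fetch_all(urls: list[str]) -> tuple[list[str], float]:
    start = time.perf_counter()
    # One worker per URL so every request can be waiting at the same time
    with ThreadPoolExecutor(max_workers=max(1, len(urls))) as pool:
        responses = list(pool.map(fake_network_request, urls))
    return responses, time.perf_counter() - start


if __name__ == "__main__":
    urls = [f"https://example.com/{i}" for i in range(5)]
    responses, elapsed = fetch_all(urls)
    print(f"{len(responses)} responses in {elapsed:.2f} s")
```

Threads suit this case because the time is spent waiting rather than computing, so the GIL is not the bottleneck.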
