Artificial General Intelligence

What a time to be alive! This is a quote I love from the “Two Minute Papers” channel on YouTube. I started following the channel closely after its video on OpenAI’s hide-and-seek reinforcement learning agents, and it has become one of my favorites. If you want to watch that video, I put the link below; it amazed me when I first saw it 2.5 years ago.

I don’t know if you watched this video, but we’re living in the dawning age of artificial intelligence. For many of us, it all started with movies about AI like Terminator, A.I., Iron Man, Transcendence, and I, Robot. In this article, we will talk about AI, these movies, and different perspectives on AI, and we will dig into recent developments and where they might lead. This is a thinking piece rather than a coding tutorial. However, we should start by understanding AI, deep learning, and the differences between them.

Artificial General Intelligence vs. Deep Learning

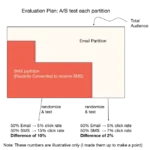

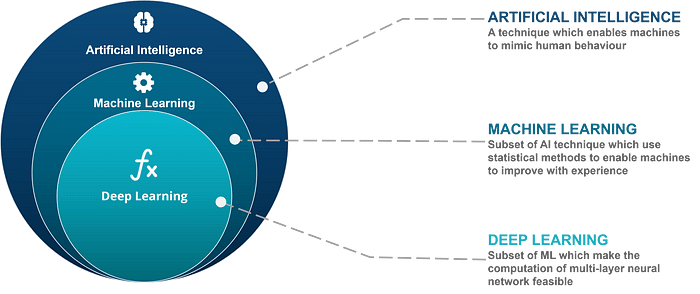

Artificial intelligence is, first of all, intelligence. It should be similar to human intelligence or better than it, and, critically, it should be made by humans. So far, we haven’t achieved such a thing. However, we have achieved several narrower things: machine learning and deep learning. What are those? They are restricted versions of the AI problem; basically, they are subsets of AI obtained by adding some limitations. If we want to visualize it, we can draw something like this:

Okay, what do we know about artificial intelligence? It’s a challenging problem to solve, and we haven’t figured it out yet. What about machine learning and deep learning? To answer that question, we first need to understand what a function is.

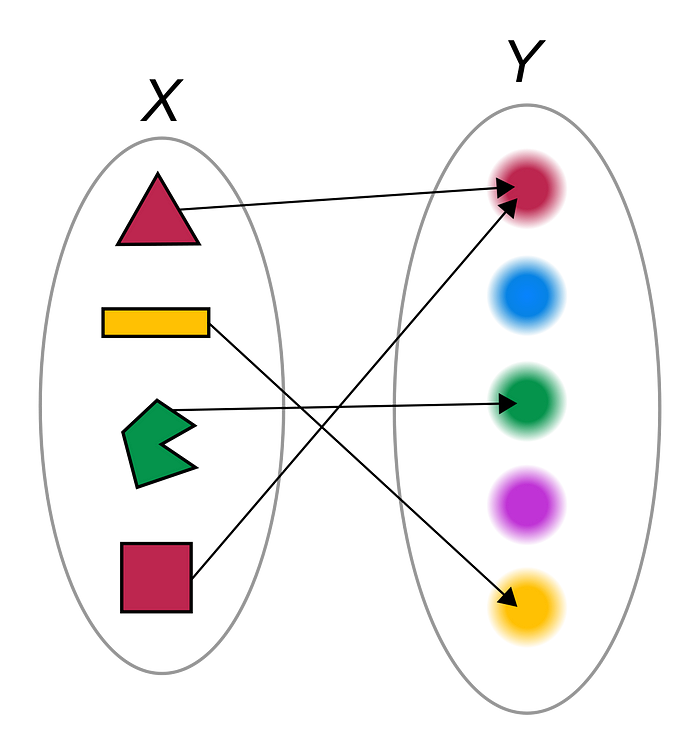

In mathematics, there are sets: collections of unique elements. For instance, all positive integers form a set; let’s call it set A. The squares of all positive integers form another set; let’s call it set B. If we define a rule that matches every element of A to exactly one element of B, we have created a function. A self-explanatory function from A to B is f(x) = x². This is a one-dimensional function, meaning it takes only one input. We can also combine several inputs and outputs and create something like f(x, y, z) = (x², y²z), which takes three inputs and returns two outputs. If you want to learn more about functions, you can search for them or pick up an introductory math book.
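As a small illustration, the two kinds of functions described above can be written in Python. The names `square` and `combine` are my own labels for this sketch, and set A is cut down to its first five elements so that it fits in memory.

```python
from typing import Tuple


def square(x: int) -> int:
    """f(x) = x^2: matches each element of set A to one element of set B."""
    return x * x


def combine(x: int, y: int, z: int) -> Tuple[int, int]:
    """A function with three inputs and two outputs: (x^2, y^2 * z)."""
    return (x * x, y * y * z)


# Set A: a finite slice of the positive integers (illustration only).
set_a = {1, 2, 3, 4, 5}
# Set B: what we get by applying f to every element of A.
set_b = {square(x) for x in set_a}

print(sorted(set_b))     # [1, 4, 9, 16, 25]
print(combine(2, 3, 4))  # (4, 36)
```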

Okay, we understand what functions are. But what do they have to do with deep learning? The good thing about functions is that we can model lots of things as functions. Let me give you an example: let’s say you want to transfer money from bank A to bank B. You need an account number at bank A, which should belong to you, and a target account at bank B. You also need a password to authorize the process and an amount to send. In addition, you need the ledgers of both banks in order to update them. So the input list is (account number A, password, account number B, amount, ledger of bank A, ledger of bank B), and we can implement a computer function that takes this input and performs the update on the ledgers. The steps of this process are obvious. Until recent years, this was the kind of functionality software engineers implemented.

However, what if the steps are not that obvious? An example: think about a function that takes an image and its size as input and returns the objects inside it along with their coordinates. You can do that with your own eyes, right? It’s pretty easy for us. However, the steps of this process are unclear, because every object is different, and an object belonging to a class may not look like the other members of that class. We can handle these differences in our minds, but only because we learn them over years of experience. There are no specific steps to write down. Then, how can we create this function?
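Before answering, here is a rough sketch of the "obvious steps" case, the bank transfer, written as a Python function. Everything in it is a simplification I made up for illustration: the ledgers are plain dictionaries, `credentials_a` is a hypothetical password table for bank A, and real banking systems look nothing like this. The point is only that every step can be written down by hand.

```python
from typing import Dict


def transfer(
    account_a: str,
    password: str,
    account_b: str,
    amount: int,
    ledger_a: Dict[str, int],
    ledger_b: Dict[str, int],
    credentials_a: Dict[str, str],  # hypothetical password table for bank A
) -> None:
    """Move `amount` from account_a (bank A) to account_b (bank B)."""
    # Step 1: check that the sender knows the password for the account.
    if credentials_a.get(account_a) != password:
        raise PermissionError("wrong account number or password")
    # Step 2: check that the target account exists in bank B.
    if account_b not in ledger_b:
        raise KeyError("unknown target account")
    # Step 3: check the amount and the available balance.
    if amount <= 0 or ledger_a[account_a] < amount:
        raise ValueError("invalid amount or insufficient funds")
    # Step 4: update both ledgers.
    ledger_a[account_a] -= amount
    ledger_b[account_b] += amount


# Made-up example data.
ledger_a = {"A-001": 500}
ledger_b = {"B-042": 100}
credentials_a = {"A-001": "s3cret"}

transfer("A-001", "s3cret", "B-042", 200, ledger_a, ledger_b, credentials_a)
print(ledger_a, ledger_b)  # {'A-001': 300} {'B-042': 300}
```

Nothing comparable can be written for the image example: there is no step 1, step 2, step 3 for "find the cat in this picture".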

The solution we developed is deep learning. What we know are the inputs and outputs; what we don’t know are the steps from A to B. So we give examples of inputs and outputs to a deep learning mechanism and tell it how well it is doing. In other words, we give the mechanism a purpose: decreasing the difference between its result and the actual one. By looking at this difference and adjusting itself, deep learning approximates the function we want from A to B. This is what deep learning is: an approximation method, but a seriously good one.

How far are we from a complete AI?
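To make the idea of "decreasing the difference" concrete, here is a minimal sketch in plain Python. It is not a deep network: the "mechanism" is just a line with two adjustable numbers, trained with gradient descent on a mean squared error. The data, the hidden rule y = 2x + 1, the learning rate, and the step count are all assumptions chosen for this example, but the loop has the same shape as the one used to train real models.

```python
# Known inputs and outputs. The rule behind them (y = 2x + 1) is hidden
# from the model; it only ever sees the examples.
inputs = [0.0, 1.0, 2.0, 3.0, 4.0]
targets = [1.0, 3.0, 5.0, 7.0, 9.0]

# The "mechanism": prediction = w * x + b, starting from a bad guess.
w, b = 0.0, 0.0
learning_rate = 0.05
n = len(inputs)


def mean_squared_error(w: float, b: float) -> float:
    """Average squared difference between predictions and actual outputs."""
    return sum((w * x + b - y) ** 2 for x, y in zip(inputs, targets)) / n


for step in range(2000):
    # How the error changes if we nudge w or b (the gradients).
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(inputs, targets)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(inputs, targets)) / n
    # Move both numbers a little in the direction that reduces the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 2), round(b, 2))  # approximately 2.0 1.0
print(mean_squared_error(w, b))  # very close to zero
```

We never told the program that the rule was "multiply by two and add one". It found an approximation of it only by shrinking the difference between its results and the actual ones.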

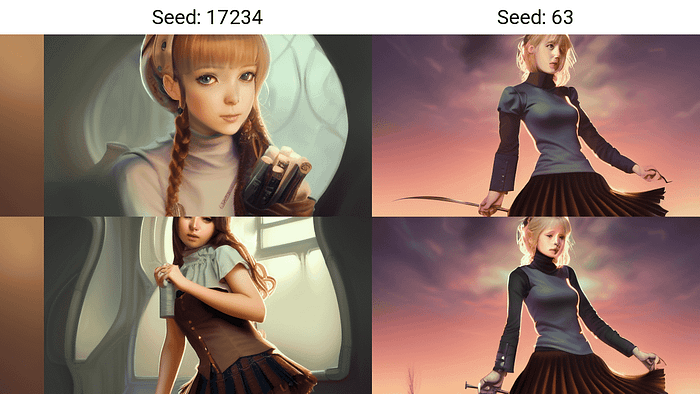

Okay, we started with recognizing simple digits. Nowadays, however, we have created two great things: GPT and Stable Diffusion. Most of you have read about them; recently, ChatGPT, a chatbot but an awe-inspiring one, has become a legend. Stable Diffusion, on the other hand, can create art pieces in a fascinating way that may shock people. I put some examples below:

These are fantastic, aren’t they? If deep learning is just an approximation, how can it do these things? Shouldn’t it be more than just approximation? The honest answer is no, it doesn’t have to be. We started by approximating simple tasks. While playing with the ideas, we developed more specialized mathematical tools to optimize the process, which eventually allowed us to handle more general tasks, for instance, visualizing a given sentence. Stable Diffusion is just a function that approximates this mapping from text to image. Similarly, ChatGPT is a function that approximates the best answer to a given prompt. At this point, some people may accuse me of oversimplifying, because under the hood there is a lot of amazing mathematics going on. I don’t deny it, but at its core, we just found better approximations of what humans can do. We started with small tasks like recognizing letters and digits, but the approach has now evolved to the point where it can do what professionals do, and far faster than they can.

Then what is the limit of this approximation, and can it lead to artificial general intelligence or superintelligence? I think deep learning is limited by only two things: the data we have about the task and our imagination. Actually, I’m not even sure about the first one, because we can generate more data using different methods. Meanwhile, researchers keep developing better algorithms to improve deep learning performance on more general tasks. If we can generalize enough, could it become something like a human? In a sense, we’re already working on this by building humanoid robots. I think we can cover all of the tasks we want, and by creating different AIs with different purposes that work collaboratively, we can build robots that do lots of things better than humans.

However, it won’t be intelligence. Why? Because a deep learning algorithm needs a specific purpose set by us; it focuses only on that task and tries to perform it as well as it can with the mathematical tools we provide. It sticks to that one purpose. It cannot change its purpose, and it cannot decide to change its own general structure. That is why deep learning is only a subset of artificial intelligence.

At this point, you may ask yourself, “What if we set the purpose of deep learning to be creating an AI?” It’s an excellent question, but the answer is easy: it won’t be able to do this, because deep learning learns by example. Currently, there is no example of artificial intelligence in the world (at least, as far as I know). Thus, it seems impossible without a person or a research team finding a way to create intelligence.

At the beginning of this year, I noticed a discussion between Ilya Sutskever and Yann LeCun, both of whom are great deep learning scientists. The discussion happened on Twitter, and it was about whether today’s language models are slightly conscious. I don’t think they have even a tiny, atomic consciousness, because being conscious and doing one specific thing extremely well are substantially different. You can share your ideas in the comment section, but I support Yann LeCun’s argument, because I think language is not the essence of consciousness but an interface to it. In our brains, we have the thing we call consciousness, and we share the inputs and outputs of this entity through language, which was itself created by it. Isn’t it amazing? In the beginning, there was no language. Humanity started with simple sounds and signs to communicate, and over time our consciousness developed language so that we could pass its contents on to others and, after the invention of writing, even to later generations. Hence, I think consciousness is an entirely different thing, and by letting machines learn only its products, we won’t be able to create intelligence.

https://twitter.com/ylecun/status/1492604977260412928

One more note for this section: I’m not saying that creating an AGI or ASI is impossible. I love Carl Sagan’s quote: “Absence of evidence is not evidence of absence.” The same applies here: we don’t know a way to create a real AI, but that doesn’t mean it’s impossible.

What will happen if we create a real Artificial General Intelligence?

Starting from Dune, there are lots of theories in science fiction. In the movies, people are generally scared of AI. Take Terminator: Skynet’s only purpose is to destroy all of humanity. Many movies revolve around this idea, but how likely is it that an artificial intelligence would want to destroy humanity?

In the Terminator movies, Skynet is introduced as a self-aware artificial superintelligence (ASI) developed specifically for military applications. I think that was false advertising. It looks more like a deep learning model that could build robots to kill people. Its most AGI-like ability was developing new technologies and evolving, but we already know that some of DeepMind’s systems have come up with better approaches to certain mathematical problems. Thus, I think Skynet is not an AGI but an extensive deep learning-based neural network whose purpose is killing people. I also think such a scenario is quite possible in the future; after all, humanity first used nuclear power as a weapon and only later for energy production. If a government builds a massive deep learning model to control its army and carelessly hands over control, this scenario is not that far-fetched. On the other hand, after seeing so many of these movies, I don’t think people will hand over control to machine learning models that easily.

In addition to Terminator, I, Robot was a good AI movie. In the movie, USR’s central system, V.I.K.I., is introduced as an AGI. Again, I don’t think it was one, because the Three Laws act as both the limitations and the purposes of those systems. On the other hand, Sonny, the robot that murdered Professor Lanning, was an AGI who could choose not to obey the Three Laws.

On the other hand, my favorites are Person of Interest and Transcendence. Their creators understood the fundamental nature of AGI. In the Person of Interest TV series, two AGIs fight each other; one tries to control humanity, and the other tries to protect free will. Both can choose their moves without restrictions, modify their own purposes, and come up with strategies in unique ways. The series depicts the singularity, a term used for the point at which we create a real AI. From that point on, such an entity may help humans make the world and the universe a better place, or it may fight for dominance. Both are possible, and in Person of Interest, the two AGIs are raised by different people. Because of their different environments, they have different perspectives on the world: one tries to rule while keeping everything secret from people, while the other tries to help people while protecting their free will.

I think this is an excellent example, because the only intelligence we know right now is that of humans and other intelligent living creatures. As you may have noticed, the environment a child is raised in can significantly affect that person’s character and view of the world. So I think that if an AGI is created, the people who create it, their desires, their self-control, and their other characteristics will matter a great deal. Years ago, a chatbot was released on Twitter, and it became racist within hours because of the humans it talked to. ChatGPT, on the other hand, is much more resistant to such things because it was trained accordingly, and it is only a neural network. For a real AGI, such influences will be even more critical, in my opinion.

Transcendence… The last movie I want to talk about takes an entirely different view. In the movie, people cannot create intelligence from scratch; instead, they copy an existing one into a computer with quantum processors. I think two points in this movie are worth mentioning. First, the AI is a friendly one. He helps humanity; although there are fights between the AI and humans, he doesn’t kill anyone, and he solves some of humanity’s most significant problems, like incurable diseases, global warming, and water pollution. Second, the humans think the AI is dangerous and never believe it’s friendly, so they create a virus against it. From this point of view, this movie is excellent.

Imagining different scenarios is intriguing, but, to be honest, I think we are still many years away from them. However, we’re achieving great things on our journey to AGI/ASI. I get amazed and excited when I read the research papers and watch the demonstration videos. I can only think: What a time to be alive!