Generative AI Tools Are Better at Images and Speech Than Writing

There’s an obvious reason why generative AI tools are so good at creating visual art that would be very time-consuming to create yourself, yet the writing they produce sounds robotic and awful. It has to do with GenAI training data and the Baroque artist Rembrandt.

Rembrandt van Rijn, the Dutch Golden Age painter, was born on July 15, 1606, in Leiden, Netherlands. Considered one of the greatest painters ever, he and the students in his Amsterdam studio were prolific and mastered the “Baroque style” of lighting and expression. His life’s work is estimated at over 300 paintings, 300 etchings, and 2,000 drawings.

But if a Dutch person today could travel back in time and meet Rembrandt, they would likely struggle to understand what he was saying. Rembrandt was schooled in Latin, which basically nobody speaks today, and Dutch itself has changed a lot. People in what is now the Netherlands spoke Old Dutch until about 1150, at the same time that others were speaking Old English and Old High German. Then came what’s called Middle Dutch, from 1150 to 1500, and Modern Dutch dates to roughly 1550. The standardization of Dutch around 1637 even included aspects of the Dutch Low Saxon language, so I seriously doubt that Rembrandt grew up speaking 21st-century Dutch at home.

William Shakespeare lived from 1564 to 1616, so his life overlapped with Rembrandt’s early childhood. Much like Middle Dutch, Middle English lasted until about 1500, giving way to Early Modern English, which lasted until about 1650. Shakespeare’s plays are still performed today, but his writing isn’t especially easy for a modern English speaker to understand.

And that brings me to the reason generative AI is so much better at producing images that look like Rembrandt’s paintings than at producing well-written modern English: it comes down to the quality of the training data.

Representational Art Predates Modern English

For as long as people have been making art, they’ve been trying to make representational art. Rembrandt’s paintings from the 1600s are still considered masterpieces not just of Baroque art, but of all art. And there are hundreds of thousands of masterpieces like them, extremely beautiful pieces of art, that are likely part of the training data for tools like DALL-E, Midjourney, and Stable Diffusion.

Now think about the modern era: how many examples of bad art do you see online? It’s extremely rare that somebody bothers to publish their art online unless it’s at least okay. Writing is different. We all learn to write in school and keep doing it, while most people stop learning visual art techniques around the age of 18. So only “good” artists publish art, but everyone writes online.

Most people are not good at writing, especially when it comes to technical articles written by a programming enthusiast who may be writing their first article since high school or college. The average blog post is not a masterpiece. So the training data is of significantly lower quality, and it’s overwhelmingly biased toward the kind of wishy-washy, blame-avoidant corporate nonsense you hear spewed every day.

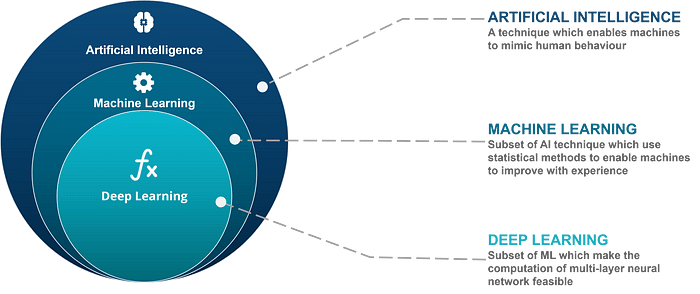

All “AI” Tools Output What They’ve Been Trained On

When you use a tool like ChatGPT, it’s going to imitate the style you see in the newspaper: brief, basic, both sides. It’s not going to output something that reads like a Wikipedia article unless you ask for that style. When you just ask it to write an article, write a blog post, or help with an email, it will imitate corporate press releases, corporate-edited journalism, and similar source material.

For English output, that source material is limited to what was written in English, rather than the work of artists all over the world. The English-language training corpus for an LLM like ChatGPT is overwhelmingly biased toward “what we found online,” which means writing produced since the invention of the internet, mostly since the year 2000, when online writing exploded.

That explains the difference. The same principle applies to transcription and speech-to-text tools, except that there it works in the AI’s favor. Everybody who speaks their mother tongue speaks it well. I mean, yeah, some people will speak it better than others, but almost universally, a native speaker will speak their own language better than a foreign-language learner, even somebody who’s invested 10 or more years in learning it as an adult.

AI Is Much Better at Text-to-Speech & Transcription

For us to understand each other when speaking a language, we have to make the same sounds in the same way. So unlike writing, where a lot of the crap on the internet has been absorbed into the training data and shows up in the outputs, pretty much any American English speaker makes essentially the same sounds.

Yeah, you could break speakers down by accent, country of origin, word choice, number of words used, vocabulary size, and everything else. But fundamentally, because native speakers speak so well, the training data is more accurate, and it doesn’t take that much input to produce text-to-speech, or to solve the reverse problem of speech-to-text (voice transcription) with a tool like OpenAI Whisper.

You don’t need all of the world’s masterpieces from the last 500 years. You just need basically any audio. OpenAI Whisper, for example, was originally trained on 680,000 hours of multilingual data. Learning a language and making the correct sounds is a much easier problem for a computer to solve than creatively writing original thought and opinion articles.

I Know I Don’t Write as Well as Rembrandt Painted

Anyone in the world today would be thrilled if they could paint as well as Rembrandt. Any non-native English speaker would be thrilled to speak English as well as a native speaker. Both are problems with enormous amounts of high-quality data. In the case of art, there are 500 or more years of representational art that has been preserved and cataloged. Include all of that, plus anime, cartoons, and high-quality fan versions of those, and you may easily have a billion images, all of them representational art.

Even though there may be billions of articles out there, the fact is OpenAI didn’t train ChatGPT on only the top 1% of writing. No, it’s trained on everything. It’s trained on people’s awful writing; it’s trained on books; and it’s trained to regurgitate the endless corporate nonsense we read in reports of CEOs avoiding any blame for what happens at their companies and throwing people under the bus.

So it’s great at writing something like a corporate email, but it’s not going to be good at original, thought-provoking writing of the highest possible quality, simply because there’s so much low-quality training input.

What If You Trained DALL-E Like ChatGPT?

Imagine every person in the world, with their current level of visual art skills, made ten pictures and uploaded them, and that was the training corpus for something like Stable Diffusion or DALL-E.

Do you think it would work very well?

But that’s much closer to what something like ChatGPT is trained on: the entire internet. It’s just much easier to take a high-quality photo with a modern smartphone camera than it is to write a high-quality 3,000-word article.

Unsplash, for example, has five million photos, and even the crappy ones are still photos — they’re photorealistic captures that are representational art. So it’s much easier to get a photorealistic output from training on photos or masterpieces than if you included everybody’s visual art.

The point is that, as a machine learning problem, the input quality is much higher for certain categories (specifically visual art and photography, as well as native speakers speaking their own mother tongues) than it is for writing. There aren’t nearly as many interesting, engaging, creative, non-corporate examples of writing online, especially about obscure topics that may not attract the best writers. Most bloggers are just enthusiasts, not Rembrandt-level writers.

Why Gen AI’s Strengths and Weaknesses Matter

The reason this all matters is that you can’t expect even GPT-4o (the latest and greatest large language model) to produce writing at the same level of quality that these generative AI tools reach with images.

We can see the same thing with video. It’s incredibly difficult to use a tool like OpenAI’s Sora to generate even 30 seconds of good video, because there isn’t a corpus of billions of high-quality examples for video the way there is for still images. It’s just a much harder problem. It’s the same thing with articles. How many times have I, as a professional developer, been frustrated by the documentation for some product I’m locked into using because of an executive decision at the company I work for? Technical documentation on the internet is overwhelmingly awful: too short, too terse, and half useless.

Would you use those words to describe the entire history of visual art as cataloged by museums since the 1600s? Absolutely not.

When it comes to ChatGPT or any other tool that is trying to solve the problem of “writing” (as opposed to “representational art”), these companies are training their algorithms on data that sucks. And that will always be 100% true for any text-based tool (“large language model”), because most writing on the internet isn’t the “best writing in the history of the world” in the way that Rembrandt’s work is the “best oil painting in the history of the world.” Simply put, there’s a lot more crappy writing out there than there are crappy photos or crappy pieces of visual art cataloged by the world’s museums over the last 400 or more years.

So what can you do with this information?

Use GenAI for Art and Speech, Not Original Writing

The takeaway I want to leave you with is that it doesn’t make much sense to compare the abilities of a tool like ChatGPT, which was trained on low-quality data, with those of a tool trained on Rembrandt’s masterpieces.

There’s a 0% chance that I could have generated this article, in these exact words, using ChatGPT, because my writing is just much stronger than the “average” writing in ChatGPT’s training data.

But there’s a non-zero chance that if I ask DALL-E or another tool for something resembling a Rembrandt masterpiece, it will give me one, because the quality of the training data is extremely high on average.

If you want to use these generative AI tools (setting aside any issues related to copyright infringement), you’re going to get more impressive results, in terms of time saved, by asking generative AI to produce text-to-speech, transcribe speech to text, or generate realistic or anime-style visual imagery than by asking it to write a book or an article.

This also explains why it’s so much easier to tell when something was written by a chatbot: it’s too long, too generic, too boring, and too repetitive. That pattern resembles a lot of writing online, especially journalism, social media posts, and, above all, corporate-speak memos and press releases.

More importantly, writing isn’t necessarily a harder problem to solve than representational art; there are just far fewer examples of world-class writing in the training set than there are examples of world-class visual art for DALL-E.

That’s why, if you want to apply these generative AI tools in their current state, you’re going to have more success generating images or using text-to-speech or speech-to-text functionality. This may be a permanent limitation, because I don’t think these companies are eager to reduce the number of tokens ChatGPT is trained on, which is what training only on the best writing would require.

Those functions will save you a lot more time than trying to use ChatGPT as a serious writing tool. It all comes down to the quality of the input data, because that determines the quality of the output. Now you know! 😁